Mirror of https://github.com/1f349/dendrite.git, synced 2025-04-04 19:25:05 +01:00

New documentation: https://matrix-org.github.io/dendrite/

parent 9599b3686e, commit 19a9166eb0

7 .gitignore (vendored)

    @@ -41,6 +41,10 @@ _testmain.go
     *.test
     *.prof
     *.wasm
    +*.aar
    +*.jar
    +*.framework
    +*.xcframework
     
     # Generated keys
     *.pem
    @@ -65,4 +69,7 @@ test/wasm/node_modules
     # Ignore complement folder when running locally
     complement/
     
    +# Stuff from GitHub Pages
    +docs/_site
    +
     media_store/
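
The first .gitignore hunk adds four patterns for build outputs. As a rough illustration of what they catch, here is a minimal Go sketch. It relies on the gitignore rule that a pattern with no slash is tested against the last path component at any depth, and it approximates that rule with `path/filepath.Match` on the base name. It does not handle negation, anchored patterns or directory-only patterns, so `git check-ignore -v <path>` remains the authoritative check. The helper name `matchesNewPatterns` is hypothetical.

```go
package main

import (
	"fmt"
	"path/filepath"
)

// newArtifactPatterns lists the glob patterns added by the first .gitignore hunk.
var newArtifactPatterns = []string{"*.aar", "*.jar", "*.framework", "*.xcframework"}

// matchesNewPatterns is a hypothetical helper that approximates gitignore matching
// for slash-free patterns: such a pattern is tested against the last path
// component, at any directory depth. Negation (!), anchored patterns and
// trailing-slash (directory-only) rules are deliberately not handled.
func matchesNewPatterns(path string) bool {
	base := filepath.Base(path)
	for _, pattern := range newArtifactPatterns {
		// filepath.Match only errors on malformed patterns; these are all valid.
		if ok, _ := filepath.Match(pattern, base); ok {
			return true
		}
	}
	return false
}

func main() {
	for _, p := range []string{"build/dendrite.aar", "ios/Dendrite.xcframework", "cmd/dendrite/main.go"} {
		fmt.Printf("%-28s ignored=%v\n", p, matchesNewPatterns(p))
	}
}
```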

    @@ -1,4 +1,5 @@
     # Dendrite
    +
     [](https://github.com/matrix-org/dendrite/actions/workflows/dendrite.yml) [](https://matrix.to/#/#dendrite:matrix.org) [](https://matrix.to/#/#dendrite-dev:matrix.org)
     
     Dendrite is a second-generation Matrix homeserver written in Go.
    @@ -52,7 +53,7 @@ The [Federation Tester](https://federationtester.matrix.org) can be used to veri
     
     ## Get started
     
    -If you wish to build a fully-federating Dendrite instance, see [INSTALL.md](docs/INSTALL.md). For running in Docker, see [build/docker](build/docker).
    +If you wish to build a fully-federating Dendrite instance, see [the Installation documentation](docs/installation). For running in Docker, see [build/docker](build/docker).
     
     The following instructions are enough to get Dendrite started as a non-federating test deployment using self-signed certificates and SQLite databases:
     

    @@ -1,60 +0,0 @@
    -# Code Style
    -
    -In addition to standard Go code style (`gofmt`, `goimports`), we use `golangci-lint`
    -to run a number of linters, the exact list can be found under linters in [.golangci.yml](.golangci.yml).
    -[Installation](https://github.com/golangci/golangci-lint#install-golangci-lint) and [Editor
    -Integration](https://golangci-lint.run/usage/integrations/#editor-integration) for
    -it can be found in the readme of golangci-lint.
    -
    -For rare cases where a linter is giving a spurious warning, it can be disabled
    -for that line or statement using a [comment
    -directive](https://golangci-lint.run/usage/false-positives/#nolint), e.g. `var
    -bad_name int //nolint:golint,unused`. This should be used sparingly and only
    -when its clear that the lint warning is spurious.
    -
    -The linters can be run using [build/scripts/find-lint.sh](/build/scripts/find-lint.sh)
    -(see file for docs) or as part of a build/test/lint cycle using
    -[build/scripts/build-test-lint.sh](/build/scripts/build-test-lint.sh).
    -
    -
    -## Labels
    -
    -In addition to `TODO` and `FIXME` we also use `NOTSPEC` to identify deviations
    -from the Matrix specification.
    -
    -## Logging
    -
    -We generally prefer to log with static log messages and include any dynamic
    -information in fields.
    -
    -```golang
    -logger := util.GetLogger(ctx)
    -
    -// Not recommended
    -logger.Infof("Finished processing keys for %s, number of keys %d", name, numKeys)
    -
    -// Recommended
    -logger.WithFields(logrus.Fields{
    -"numberOfKeys": numKeys,
    -"entityName": name,
    -}).Info("Finished processing keys")
    -```
    -
    -This is useful when logging to systems that natively understand log fields, as
    -it allows people to search and process the fields without having to parse the
    -log message.
    -
    -
    -## Visual Studio Code
    -
    -If you use VSCode then the following is an example of a workspace setting that
    -sets up linting correctly:
    -
    -```json
    -{
    -"go.lintTool":"golangci-lint",
    -"go.lintFlags": [
    -"--fast"
    -]
    -}
    -```

@ -1,55 +1,103 @@

|

|||||||

|

---

|

||||||

|

title: Contributing

|

||||||

|

parent: Development

|

||||||

|

permalink: /development/contributing

|

||||||

|

---

|

||||||

|

|

||||||

# Contributing to Dendrite

|

# Contributing to Dendrite

|

||||||

|

|

||||||

Everyone is welcome to contribute to Dendrite! We aim to make it as easy as

|

Everyone is welcome to contribute to Dendrite! We aim to make it as easy as

|

||||||

possible to get started.

|

possible to get started.

|

||||||

|

|

||||||

Please ensure that you sign off your contributions! See [Sign Off](#sign-off)

|

## Sign off

|

||||||

section below.

|

|

||||||

|

We ask that everyone who contributes to the project signs off their contributions

|

||||||

|

in accordance with the [DCO](https://github.com/matrix-org/matrix-spec/blob/main/CONTRIBUTING.rst#sign-off).

|

||||||

|

In effect, this means adding a statement to your pull requests or commit messages

|

||||||

|

along the lines of:

|

||||||

|

|

||||||

|

```

|

||||||

|

Signed-off-by: Full Name <email address>

|

||||||

|

```

|

||||||

|

|

||||||

|

Unfortunately we can't accept contributions without it.

|

||||||

|

|

||||||

## Getting up and running

|

## Getting up and running

|

||||||

|

|

||||||

See [INSTALL.md](INSTALL.md) for instructions on setting up a running dev

|

See the [Installation](INSTALL.md) section for information on how to build an

|

||||||

instance of dendrite, and [CODE_STYLE.md](CODE_STYLE.md) for the code style

|

instance of Dendrite. You will likely need this in order to test your changes.

|

||||||

guide.

|

|

||||||

|

|

||||||

We use [golangci-lint](https://github.com/golangci/golangci-lint) to lint

|

## Code style

|

||||||

Dendrite which can be executed via:

|

|

||||||

|

|

||||||

|

On the whole, the format as prescribed by `gofmt`, `goimports` etc. is exactly

|

||||||

|

what we use and expect. Please make sure that you run one of these formatters before

|

||||||

|

submitting your contribution.

|

||||||

|

|

||||||

|

## Comments

|

||||||

|

|

||||||

|

Please make sure that the comments adequately explain *why* your code does what it

|

||||||

|

does. If there are statements that are not obvious, please comment what they do.

|

||||||

|

|

||||||

|

We also have some special tags which we use for searchability. These are:

|

||||||

|

|

||||||

|

* `// TODO:` for places where a future review, rewrite or refactor is likely required;

|

||||||

|

* `// FIXME:` for places where we know there is an outstanding bug that needs a fix;

|

||||||

|

* `// NOTSPEC:` for places where the behaviour specifically does not match what the

|

||||||

|

[Matrix Specification](https://spec.matrix.org/) prescribes, along with a description

|

||||||

|

of *why* that is the case.

|

||||||

|

|

||||||

|

## Linting

|

||||||

|

|

||||||

|

We use [golangci-lint](https://github.com/golangci/golangci-lint) to lint Dendrite

|

||||||

|

which can be executed via:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

golangci-lint run

|

||||||

```

|

```

|

||||||

$ golangci-lint run

|

|

||||||

```

|

If you are receiving linter warnings that you are certain are spurious and want to

|

||||||

|

silence them, you can annotate the relevant lines or methods with a `// nolint:`

|

||||||

|

comment. Please avoid doing this if you can.

|

||||||

|

|

||||||

|

## Unit tests

|

||||||

|

|

||||||

We also have unit tests which we run via:

|

We also have unit tests which we run via:

|

||||||

|

|

||||||

```

|

```bash

|

||||||

$ go test ./...

|

go test ./...

|

||||||

```

|

```

|

||||||

|

|

||||||

## Continuous Integration

|

In general, we like submissions that come with tests. Anything that proves that the

|

||||||

|

code is functioning as intended is great, and to ensure that we will find out quickly

|

||||||

|

in the future if any regressions happen.

|

||||||

|

|

||||||

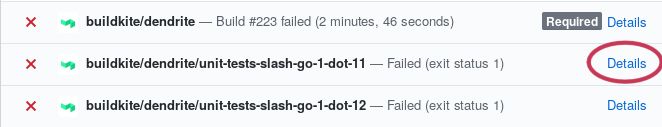

When a Pull Request is submitted, continuous integration jobs are run

|

We use the standard [Go testing package](https://gobyexample.com/testing) for this,

|

||||||

automatically to ensure the code builds and is relatively well-written. The jobs

|

alongside some helper functions in our own [`test` package](https://pkg.go.dev/github.com/matrix-org/dendrite/test).

|

||||||

are run on [Buildkite](https://buildkite.com/matrix-dot-org/dendrite/), and the

|

|

||||||

Buildkite pipeline configuration can be found in Matrix.org's [pipelines

|

|

||||||

repository](https://github.com/matrix-org/pipelines).

|

|

||||||

|

|

||||||

If a job fails, click the "details" button and you should be taken to the job's

|

## Continuous integration

|

||||||

logs.

|

|

||||||

|

|

||||||

|

by GitHub actions to ensure that the code builds and works in a number of configurations,

|

||||||

|

such as different Go versions, using full HTTP APIs and both database engines.

|

||||||

|

CI will automatically run the unit tests (as above) as well as both of our integration

|

||||||

|

test suites ([Complement](https://github.com/matrix-org/complement) and

|

||||||

|

[SyTest](https://github.com/matrix-org/sytest)).

|

||||||

|

|

||||||

Scroll down to the failing step and you should see some log output. Scan the

|

You can see the progress of any CI jobs at the bottom of the Pull Request page, or by

|

||||||

logs until you find what it's complaining about, fix it, submit a new commit,

|

looking at the [Actions](https://github.com/matrix-org/dendrite/actions) tab of the Dendrite

|

||||||

then rinse and repeat until CI passes.

|

repository.

|

||||||

|

|

||||||

### Running CI Tests Locally

|

We generally won't accept a submission unless all of the CI jobs are passing. We

|

||||||

|

do understand though that sometimes the tests get things wrong — if that's the case,

|

||||||

|

please also raise a pull request to fix the relevant tests!

|

||||||

|

|

||||||

|

### Running CI tests locally

|

||||||

|

|

||||||

To save waiting for CI to finish after every commit, it is ideal to run the

|

To save waiting for CI to finish after every commit, it is ideal to run the

|

||||||

checks locally before pushing, fixing errors first. This also saves other people

|

checks locally before pushing, fixing errors first. This also saves other people

|

||||||

time as only so many PRs can be tested at a given time.

|

time as only so many PRs can be tested at a given time.

|

||||||

|

|

||||||

To execute what Buildkite tests, first run `./build/scripts/build-test-lint.sh`; this

|

To execute what CI tests, first run `./build/scripts/build-test-lint.sh`; this

|

||||||

script will build the code, lint it, and run `go test ./...` with race condition

|

script will build the code, lint it, and run `go test ./...` with race condition

|

||||||

checking enabled. If something needs to be changed, fix it and then run the

|

checking enabled. If something needs to be changed, fix it and then run the

|

||||||

script again until it no longer complains. Be warned that the linting can take a

|

script again until it no longer complains. Be warned that the linting can take a

|

||||||

    @@ -64,8 +112,7 @@ passing tests.
     If these two steps report no problems, the code should be able to pass the CI
     tests.
     
    +## Picking things to do
    -## Picking Things To Do
     
     If you're new then feel free to pick up an issue labelled [good first
     issue](https://github.com/matrix-org/dendrite/labels/good%20first%20issue).

    @@ -81,17 +128,10 @@ We ask people who are familiar with Dendrite to leave the [good first
     issue](https://github.com/matrix-org/dendrite/labels/good%20first%20issue)
     issues so that there is always a way for new people to come and get involved.
     
    -## Getting Help
    +## Getting help
     
     For questions related to developing on Dendrite we have a dedicated room on
     Matrix [#dendrite-dev:matrix.org](https://matrix.to/#/#dendrite-dev:matrix.org)
     where we're happy to help.
     
    -For more general questions please use
    -[#dendrite:matrix.org](https://matrix.to/#/#dendrite:matrix.org).
    -
    -## Sign off
    -
    -We ask that everyone who contributes to the project signs off their
    -contributions, in accordance with the
    -[DCO](https://github.com/matrix-org/matrix-spec/blob/main/CONTRIBUTING.rst#sign-off).
    +For more general questions please use [#dendrite:matrix.org](https://matrix.to/#/#dendrite:matrix.org).
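
The new Sign off section asks for a line of the form `Signed-off-by: Full Name <email address>`. Git can add one for you with `git commit -s` (long form `--signoff`), which takes the name and email from your configured `user.name` and `user.email`. The sketch below is a hypothetical pre-push check, not part of Dendrite's CI. Its regular expression only approximates the format: a name followed by an email in angle brackets, on a line of its own.

```go
package main

import (
	"fmt"
	"regexp"
)

// signOffLine approximates a DCO trailer: "Signed-off-by: Full Name <email>" on a
// line of its own. (?m) lets ^ and $ match at each line of a multi-line message.
var signOffLine = regexp.MustCompile(`(?m)^Signed-off-by: [^<>\n]+ <[^<>\s@]+@[^<>\s]+>$`)

// hasSignOff reports whether a commit message or PR description carries at least
// one sign-off line. Hypothetical helper; the real requirement is the DCO text itself.
func hasSignOff(message string) bool {
	return signOffLine.MatchString(message)
}

func main() {
	signed := "Fix typo in FAQ\n\nSigned-off-by: Alice Example <alice@example.org>\n"
	fmt.Println(hasSignOff(signed))              // true
	fmt.Println(hasSignOff("Fix typo in FAQ\n")) // false
}
```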

140 docs/DESIGN.md

    @@ -1,140 +0,0 @@
    -# Design
    -
    -## Log Based Architecture
    -
    -### Decomposition and Decoupling
    -
    -A matrix homeserver can be built around append-only event logs built from the
    -messages, receipts, presence, typing notifications, device messages and other
    -events sent by users on the homeservers or by other homeservers.
    -
    -The server would then decompose into two categories: writers that add new
    -entries to the logs and readers that read those entries.
    -
    -The event logs then serve to decouple the two components, the writers and
    -readers need only agree on the format of the entries in the event log.
    -This format could be largely derived from the wire format of the events used
    -in the client and federation protocols:
    -
    -
    -  C-S API   +---------+    Event Log    +---------+   C-S API
    - ---------> |         |+  (e.g. kafka)  |         |+ --------->
    -            | Writers || =============> | Readers ||
    - ---------> |         ||                |         || --------->
    -  S-S API   +---------+|                +---------+|  S-S API
    -             +---------+                 +---------+
    -
    -However the way matrix handles state events in a room creates a few
    -complications for this model.
    -
    -1) Writers require the room state at an event to check if it is allowed.
    -2) Readers require the room state at an event to determine the users and
    -servers that are allowed to see the event.
    -3) A client can query the current state of the room from a reader.
    -
    -The writers and readers cannot extract the necessary information directly from
    -the event logs because it would take too long to extract the information as the
    -state is built up by collecting individual state events from the event history.
    -
    -The writers and readers therefore need access to something that stores copies
    -of the event state in a form that can be efficiently queried. One possibility
    -would be for the readers and writers to maintain copies of the current state
    -in local databases. A second possibility would be to add a dedicated component
    -that maintained the state of the room and exposed an API that the readers and
    -writers could query to get the state. The second has the advantage that the
    -state is calculated and stored in a single location.
    -
    -
    -  C-S API   +---------+    Log   +--------+   Log   +---------+   C-S API
    - ---------> |         |+ ======> |        | ======> |         |+ --------->
    -            | Writers ||         | Room   |         | Readers ||
    - ---------> |         || <------ | Server | ------> |         || --------->
    -  S-S API   +---------+|  Query  |        |  Query  +---------+|  S-S API
    -             +---------+         +--------+          +---------+
    -
    -
    -The room server can annotate the events it logs to the readers with room state
    -so that the readers can avoid querying the room server unnecessarily.
    -
    -[This architecture can be extended to cover most of the APIs.](WIRING.md)
    -
    -## How things are supposed to work.
    -
    -### Local client sends an event in an existing room.
    -
    -0) The client sends a PUT `/_matrix/client/r0/rooms/{roomId}/send` request
    -and an HTTP loadbalancer routes the request to a ClientAPI.
    -
    -1) The ClientAPI:
    -
    -* Authenticates the local user using the `access_token` sent in the HTTP
    -request.
    -* Checks if it has already processed or is processing a request with the
    -same `txnID`.
    -* Calculates which state events are needed to auth the request.
    -* Queries the necessary state events and the latest events in the room
    -from the RoomServer.
    -* Confirms that the room exists and checks whether the event is allowed by
    -the auth checks.
    -* Builds and signs the events.
    -* Writes the event to a "InputRoomEvent" kafka topic.
    -* Send a `200 OK` response to the client.
    -
    -2) The RoomServer reads the event from "InputRoomEvent" kafka topic:
    -
    -* Checks if it has already has a copy of the event.
    -* Checks if the event is allowed by the auth checks using the auth events
    -at the event.
    -* Calculates the room state at the event.
    -* Works out what the latest events in the room after processing this event
    -are.
    -* Calculate how the changes in the latest events affect the current state
    -of the room.
    -* TODO: Workout what events determine the visibility of this event to other
    -users
    -* Writes the event along with the changes in current state to an
    -"OutputRoomEvent" kafka topic. It writes all the events for a room to
    -the same kafka partition.
    -
    -3a) The ClientSync reads the event from the "OutputRoomEvent" kafka topic:
    -
    -* Updates its copy of the current state for the room.
    -* Works out which users need to be notified about the event.
    -* Wakes up any pending `/_matrix/client/r0/sync` requests for those users.
    -* Adds the event to the recent timeline events for the room.
    -
    -3b) The FederationSender reads the event from the "OutputRoomEvent" kafka topic:
    -
    -* Updates its copy of the current state for the room.
    -* Works out which remote servers need to be notified about the event.
    -* Sends a `/_matrix/federation/v1/send` request to those servers.
    -* Or if there is a request in progress then add the event to a queue to be
    -sent when the previous request finishes.
    -
    -### Remote server sends an event in an existing room.
    -
    -0) The remote server sends a `PUT /_matrix/federation/v1/send` request and an
    -HTTP loadbalancer routes the request to a FederationReceiver.
    -
    -1) The FederationReceiver:
    -
    -* Authenticates the remote server using the "X-Matrix" authorisation header.
    -* Checks if it has already processed or is processing a request with the
    -same `txnID`.
    -* Checks the signatures for the events.
    -Fetches the ed25519 keys for the event senders if necessary.
    -* Queries the RoomServer for a copy of the state of the room at each event.
    -* If the RoomServer doesn't know the state of the room at an event then
    -query the state of the room at the event from the remote server using
    -`GET /_matrix/federation/v1/state_ids` falling back to
    -`GET /_matrix/federation/v1/state` if necessary.
    -* Once the state at each event is known check whether the events are
    -allowed by the auth checks against the state at each event.
    -* For each event that is allowed write the event to the "InputRoomEvent"
    -kafka topic.
    -* Send a 200 OK response to the remote server listing which events were
    -successfully processed and which events failed
    -
    -2) The RoomServer processes the event the same as it would a local event.
    -
    -3a) The ClientSync processes the event the same as it would a local event.
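
The deleted DESIGN.md describes three things: writers that only append to event logs, independent readers that consume those logs, and a RoomServer that writes all the events for a room to the same Kafka partition. Here is a minimal in-memory Go sketch of those ideas. It assumes FNV-1a hashing of the room ID to pick a partition (Kafka clients use their own hash functions) and gives each reader its own offsets. The type and function names are hypothetical, and this is not Dendrite's implementation.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// Event is a hypothetical, minimal stand-in for a room event written to the log.
type Event struct {
	RoomID  string
	EventID string
}

// PartitionedLog is an in-memory, append-only log split into partitions, loosely
// modelling a Kafka topic such as "OutputRoomEvent".
type PartitionedLog struct {
	partitions [][]Event
}

func NewPartitionedLog(numPartitions int) *PartitionedLog {
	return &PartitionedLog{partitions: make([][]Event, numPartitions)}
}

// partitionFor hashes the room ID, so every event for a given room lands in the
// same partition and readers see that room's events in the order written.
func (l *PartitionedLog) partitionFor(roomID string) int {
	h := fnv.New32a()
	h.Write([]byte(roomID)) // hash.Hash writes never return an error
	return int(h.Sum32() % uint32(len(l.partitions)))
}

// Append is the writer side: entries are only ever added at the end of a partition.
func (l *PartitionedLog) Append(ev Event) (partition, offset int) {
	p := l.partitionFor(ev.RoomID)
	l.partitions[p] = append(l.partitions[p], ev)
	return p, len(l.partitions[p]) - 1
}

// Reader is the consumer side. Each reader keeps its own offsets, so several
// readers can consume the same log independently and at their own pace.
type Reader struct {
	log     *PartitionedLog
	offsets []int
}

func NewReader(l *PartitionedLog) *Reader {
	return &Reader{log: l, offsets: make([]int, len(l.partitions))}
}

// Poll returns a copy of the entries appended to partition p since the last Poll.
func (r *Reader) Poll(p int) []Event {
	pending := r.log.partitions[p][r.offsets[p]:]
	r.offsets[p] = len(r.log.partitions[p])
	return append([]Event(nil), pending...)
}

func main() {
	eventLog := NewPartitionedLog(4)
	p, _ := eventLog.Append(Event{RoomID: "!a:example.org", EventID: "$1"})
	eventLog.Append(Event{RoomID: "!b:example.org", EventID: "$2"})
	eventLog.Append(Event{RoomID: "!a:example.org", EventID: "$3"})

	// Two independent readers, e.g. a sync-style and a federation-style consumer.
	syncReader, federationReader := NewReader(eventLog), NewReader(eventLog)
	for _, ev := range syncReader.Poll(p) {
		if ev.RoomID == "!a:example.org" {
			fmt.Println("sync sees", ev.EventID) // $1 then $3, in write order
		}
	}
	fmt.Println("federation reader pending:", len(federationReader.Poll(p)))
}
```

Partitioning by room gives up any global ordering across rooms. In exchange, each room's events stay ordered while different rooms can be processed in parallel.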

67 docs/FAQ.md

    @@ -1,26 +1,34 @@
    -# Frequently Asked Questions
    +---
    +title: FAQ
    +nav_order: 1
    +permalink: /faq
    +---
     
    -### Is Dendrite stable?
    +# FAQ
     
    +## Is Dendrite stable?
    +
     Mostly, although there are still bugs and missing features. If you are a confident power user and you are happy to spend some time debugging things when they go wrong, then please try out Dendrite. If you are a community, organisation or business that demands stability and uptime, then Dendrite is not for you yet - please install Synapse instead.
     
    -### Is Dendrite feature-complete?
    +## Is Dendrite feature-complete?
     
     No, although a good portion of the Matrix specification has been implemented. Mostly missing are client features - see the readme at the root of the repository for more information.
     
    -### Is there a migration path from Synapse to Dendrite?
    +## Is there a migration path from Synapse to Dendrite?
     
    -No, not at present. There will be in the future when Dendrite reaches version 1.0.
    +No, not at present. There will be in the future when Dendrite reaches version 1.0. For now it is not
    +possible to migrate an existing Synapse deployment to Dendrite.
     
    -### Can I use Dendrite with an existing Synapse database?
    +## Can I use Dendrite with an existing Synapse database?
     
     No, Dendrite has a very different database schema to Synapse and the two are not interchangeable.
     
    -### Should I run a monolith or a polylith deployment?
    +## Should I run a monolith or a polylith deployment?
     
    -Monolith deployments are always preferred where possible, and at this time, are far better tested than polylith deployments are. The only reason to consider a polylith deployment is if you wish to run different Dendrite components on separate physical machines.
    +Monolith deployments are always preferred where possible, and at this time, are far better tested than polylith deployments are. The only reason to consider a polylith deployment is if you wish to run different Dendrite components on separate physical machines, but this is an advanced configuration which we don't
    +recommend.
     
    -### I've installed Dendrite but federation isn't working
    +## I've installed Dendrite but federation isn't working
     
     Check the [Federation Tester](https://federationtester.matrix.org). You need at least:
     

@ -28,54 +36,57 @@ Check the [Federation Tester](https://federationtester.matrix.org). You need at

|

|||||||

* A valid TLS certificate for that DNS name

|

* A valid TLS certificate for that DNS name

|

||||||

* Either DNS SRV records or well-known files

|

* Either DNS SRV records or well-known files

|

||||||

|

|

||||||

### Does Dendrite work with my favourite client?

|

## Does Dendrite work with my favourite client?

|

||||||

|

|

||||||

It should do, although we are aware of some minor issues:

|

It should do, although we are aware of some minor issues:

|

||||||

|

|

||||||

* **Element Android**: registration does not work, but logging in with an existing account does

|

* **Element Android**: registration does not work, but logging in with an existing account does

|

||||||

* **Hydrogen**: occasionally sync can fail due to gaps in the `since` parameter, but clearing the cache fixes this

|

* **Hydrogen**: occasionally sync can fail due to gaps in the `since` parameter, but clearing the cache fixes this

|

||||||

|

|

||||||

### Does Dendrite support push notifications?

|

## Does Dendrite support push notifications?

|

||||||

|

|

||||||

Yes, we have experimental support for push notifications. Configure them in the usual way in your Matrix client.

|

Yes, we have experimental support for push notifications. Configure them in the usual way in your Matrix client.

|

||||||

|

|

||||||

### Does Dendrite support application services/bridges?

|

## Does Dendrite support application services/bridges?

|

||||||

|

|

||||||

Possibly - Dendrite does have some application service support but it is not well tested. Please let us know by raising a GitHub issue if you try it and run into problems.

|

Possibly - Dendrite does have some application service support but it is not well tested. Please let us know by raising a GitHub issue if you try it and run into problems.

|

||||||

|

|

||||||

Bridges known to work (as of v0.5.1):

|

Bridges known to work (as of v0.5.1):

|

||||||

- [Telegram](https://docs.mau.fi/bridges/python/telegram/index.html)

|

|

||||||

- [WhatsApp](https://docs.mau.fi/bridges/go/whatsapp/index.html)

|

* [Telegram](https://docs.mau.fi/bridges/python/telegram/index.html)

|

||||||

- [Signal](https://docs.mau.fi/bridges/python/signal/index.html)

|

* [WhatsApp](https://docs.mau.fi/bridges/go/whatsapp/index.html)

|

||||||

- [probably all other mautrix bridges](https://docs.mau.fi/bridges/)

|

* [Signal](https://docs.mau.fi/bridges/python/signal/index.html)

|

||||||

|

* [probably all other mautrix bridges](https://docs.mau.fi/bridges/)

|

||||||

|

|

||||||

Remember to add the config file(s) to the `app_service_api` [config](https://github.com/matrix-org/dendrite/blob/de38be469a23813921d01bef3e14e95faab2a59e/dendrite-config.yaml#L130-L131).

|

Remember to add the config file(s) to the `app_service_api` [config](https://github.com/matrix-org/dendrite/blob/de38be469a23813921d01bef3e14e95faab2a59e/dendrite-config.yaml#L130-L131).

|

||||||

|

|

||||||

### Is it possible to prevent communication with the outside world?

|

## Is it possible to prevent communication with the outside world?

|

||||||

|

|

||||||

Yes, you can do this by disabling federation - set `disable_federation` to `true` in the `global` section of the Dendrite configuration file.

|

Yes, you can do this by disabling federation - set `disable_federation` to `true` in the `global` section of the Dendrite configuration file.

|

||||||

|

|

||||||

### Should I use PostgreSQL or SQLite for my databases?

|

## Should I use PostgreSQL or SQLite for my databases?

|

||||||

|

|

||||||

Please use PostgreSQL wherever possible, especially if you are planning to run a homeserver that caters to more than a couple of users.

|

Please use PostgreSQL wherever possible, especially if you are planning to run a homeserver that caters to more than a couple of users.

|

||||||

|

|

||||||

### Dendrite is using a lot of CPU

|

## Dendrite is using a lot of CPU

|

||||||

|

|

||||||

Generally speaking, you should expect to see some CPU spikes, particularly if you are joining or participating in large rooms. However, constant/sustained high CPU usage is not expected - if you are experiencing that, please join `#dendrite-dev:matrix.org` and let us know, or file a GitHub issue.

|

Generally speaking, you should expect to see some CPU spikes, particularly if you are joining or participating in large rooms. However, constant/sustained high CPU usage is not expected - if you are experiencing that, please join `#dendrite-dev:matrix.org` and let us know what you were doing when the

|

||||||

|

CPU usage shot up, or file a GitHub issue. If you can take a [CPU profile](PROFILING.md) then that would

|

||||||

|

be a huge help too, as that will help us to understand where the CPU time is going.

### Dendrite is using a lot of RAM

## Dendrite is using a lot of RAM

A lot of users report that Dendrite is using a lot of RAM, sometimes even gigabytes of it. This is usually due to Go's allocator behaviour, which tries to hold onto allocated memory until the operating system wants to reclaim it for something else. This can make the memory usage look significantly inflated in tools like `top`/`htop` when actually most of that memory is not really in use at all.

If you want to prevent this behaviour so that the Go runtime releases memory normally, start Dendrite using the `GODEBUG=madvdontneed=1` environment variable. It is also expected that the allocator behaviour will be changed again in Go 1.16 so that it does not hold onto memory unnecessarily in this way.

If you are running with `GODEBUG=madvdontneed=1` and still see hugely inflated memory usage then that's quite possibly a bug - please join `#dendrite-dev:matrix.org` and let us know, or file a GitHub issue.

### Dendrite is running out of PostgreSQL database connections

As above with CPU usage, some memory spikes are expected if Dendrite is doing particularly heavy work
at a given instant. However, if it is using more RAM than you expect for a long time, that's probably
not expected. Join `#dendrite-dev:matrix.org` and let us know what you were doing when the memory usage
ballooned, or file a GitHub issue if you can. If you can take a [memory profile](PROFILING.md) then that
would be a huge help too, as that will help us to understand where the memory usage is happening.

## Dendrite is running out of PostgreSQL database connections

You may need to revisit the connection limit of your PostgreSQL server and/or make changes to the `max_connections` lines in your Dendrite configuration. Be aware that each Dendrite component opens its own database connections and has its own connection limit, even in monolith mode!
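
Because every component has its own pool, the figure that matters is the sum of all the per-component limits. The sketch below adds up hypothetical limits and compares the total with PostgreSQL's `max_connections`, keeping `superuser_reserved_connections` free. It assumes PostgreSQL's defaults of 100 and 3. The component names and numbers are placeholders, not Dendrite defaults.

```go
package main

import (
	"fmt"
	"sort"
)

// connectionBudget adds up the connection-pool limits configured for each
// component and reports whether the total fits within PostgreSQL's
// max_connections once `reserved` slots are set aside for superusers.
func connectionBudget(perComponent map[string]int, serverMax, reserved int) (total int, fits bool) {
	for _, limit := range perComponent {
		total += limit
	}
	return total, total <= serverMax-reserved
}

func main() {
	// Hypothetical per-component limits; read the real ones from your own
	// dendrite.yaml. Every component that talks to PostgreSQL counts,
	// including in monolith mode.
	limits := map[string]int{
		"federationapi": 10,
		"keyserver":     10,
		"mediaapi":      10,
		"roomserver":    20,
		"syncapi":       20,
		"userapi":       10,
	}

	names := make([]string, 0, len(limits))
	for name := range limits {
		names = append(names, name)
	}
	sort.Strings(names) // map iteration order is random; sort for stable output
	for _, name := range names {
		fmt.Printf("%-14s %3d\n", name, limits[name])
	}

	// 100 and 3 are PostgreSQL's defaults for max_connections and
	// superuser_reserved_connections.
	total, fits := connectionBudget(limits, 100, 3)
	fmt.Printf("total %d, fits: %v\n", total, fits)
}
```

If the total does not fit, you can lower the per-component limits or raise `max_connections` in `postgresql.conf`. Raising `max_connections` only takes effect after a PostgreSQL restart.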

### What is being reported when enabling anonymous stats?

## What is being reported when enabling anonymous stats?

If anonymous stats reporting is enabled, the following data is sent to the defined endpoint.

5

docs/Gemfile

Normal file

@ -0,0 +1,5 @@

source "https://rubygems.org"
gem "github-pages", "~> 226", group: :jekyll_plugins
group :jekyll_plugins do
  gem "jekyll-feed", "~> 0.15.1"
end

283

docs/Gemfile.lock

Normal file

@ -0,0 +1,283 @@

GEM
  remote: https://rubygems.org/
  specs:
    activesupport (6.0.5)
      concurrent-ruby (~> 1.0, >= 1.0.2)
      i18n (>= 0.7, < 2)
      minitest (~> 5.1)
      tzinfo (~> 1.1)
      zeitwerk (~> 2.2, >= 2.2.2)
    addressable (2.8.0)
      public_suffix (>= 2.0.2, < 5.0)
    coffee-script (2.4.1)
      coffee-script-source
      execjs
    coffee-script-source (1.11.1)
    colorator (1.1.0)
    commonmarker (0.23.4)
    concurrent-ruby (1.1.10)
    dnsruby (1.61.9)
      simpleidn (~> 0.1)
    em-websocket (0.5.3)
      eventmachine (>= 0.12.9)
      http_parser.rb (~> 0)
    ethon (0.15.0)
      ffi (>= 1.15.0)
    eventmachine (1.2.7)
    execjs (2.8.1)
    faraday (1.10.0)
      faraday-em_http (~> 1.0)
      faraday-em_synchrony (~> 1.0)
      faraday-excon (~> 1.1)
      faraday-httpclient (~> 1.0)
      faraday-multipart (~> 1.0)
      faraday-net_http (~> 1.0)
      faraday-net_http_persistent (~> 1.0)
      faraday-patron (~> 1.0)
      faraday-rack (~> 1.0)
      faraday-retry (~> 1.0)
      ruby2_keywords (>= 0.0.4)
    faraday-em_http (1.0.0)
    faraday-em_synchrony (1.0.0)
    faraday-excon (1.1.0)
    faraday-httpclient (1.0.1)
    faraday-multipart (1.0.3)
      multipart-post (>= 1.2, < 3)
    faraday-net_http (1.0.1)
    faraday-net_http_persistent (1.2.0)
    faraday-patron (1.0.0)
    faraday-rack (1.0.0)
    faraday-retry (1.0.3)
    ffi (1.15.5)
    forwardable-extended (2.6.0)
    gemoji (3.0.1)
    github-pages (226)
      github-pages-health-check (= 1.17.9)
      jekyll (= 3.9.2)
      jekyll-avatar (= 0.7.0)
      jekyll-coffeescript (= 1.1.1)
      jekyll-commonmark-ghpages (= 0.2.0)
      jekyll-default-layout (= 0.1.4)
      jekyll-feed (= 0.15.1)
      jekyll-gist (= 1.5.0)
      jekyll-github-metadata (= 2.13.0)
      jekyll-include-cache (= 0.2.1)
      jekyll-mentions (= 1.6.0)
      jekyll-optional-front-matter (= 0.3.2)
      jekyll-paginate (= 1.1.0)
      jekyll-readme-index (= 0.3.0)
      jekyll-redirect-from (= 0.16.0)
      jekyll-relative-links (= 0.6.1)
      jekyll-remote-theme (= 0.4.3)
      jekyll-sass-converter (= 1.5.2)
      jekyll-seo-tag (= 2.8.0)
      jekyll-sitemap (= 1.4.0)
      jekyll-swiss (= 1.0.0)
      jekyll-theme-architect (= 0.2.0)
      jekyll-theme-cayman (= 0.2.0)
      jekyll-theme-dinky (= 0.2.0)
      jekyll-theme-hacker (= 0.2.0)
      jekyll-theme-leap-day (= 0.2.0)
      jekyll-theme-merlot (= 0.2.0)
      jekyll-theme-midnight (= 0.2.0)
      jekyll-theme-minimal (= 0.2.0)
      jekyll-theme-modernist (= 0.2.0)
      jekyll-theme-primer (= 0.6.0)
      jekyll-theme-slate (= 0.2.0)
      jekyll-theme-tactile (= 0.2.0)
      jekyll-theme-time-machine (= 0.2.0)
      jekyll-titles-from-headings (= 0.5.3)
      jemoji (= 0.12.0)
      kramdown (= 2.3.2)
      kramdown-parser-gfm (= 1.1.0)
      liquid (= 4.0.3)
      mercenary (~> 0.3)
      minima (= 2.5.1)
      nokogiri (>= 1.13.4, < 2.0)
      rouge (= 3.26.0)
      terminal-table (~> 1.4)
    github-pages-health-check (1.17.9)
      addressable (~> 2.3)
      dnsruby (~> 1.60)
      octokit (~> 4.0)
      public_suffix (>= 3.0, < 5.0)
      typhoeus (~> 1.3)
    html-pipeline (2.14.1)
      activesupport (>= 2)
      nokogiri (>= 1.4)
    http_parser.rb (0.8.0)
    i18n (0.9.5)
      concurrent-ruby (~> 1.0)
    jekyll (3.9.2)
      addressable (~> 2.4)
      colorator (~> 1.0)
      em-websocket (~> 0.5)
      i18n (~> 0.7)
      jekyll-sass-converter (~> 1.0)
      jekyll-watch (~> 2.0)
      kramdown (>= 1.17, < 3)
      liquid (~> 4.0)
      mercenary (~> 0.3.3)
      pathutil (~> 0.9)
      rouge (>= 1.7, < 4)
      safe_yaml (~> 1.0)
    jekyll-avatar (0.7.0)
      jekyll (>= 3.0, < 5.0)
    jekyll-coffeescript (1.1.1)
      coffee-script (~> 2.2)
      coffee-script-source (~> 1.11.1)
    jekyll-commonmark (1.4.0)
      commonmarker (~> 0.22)
    jekyll-commonmark-ghpages (0.2.0)
      commonmarker (~> 0.23.4)
      jekyll (~> 3.9.0)
      jekyll-commonmark (~> 1.4.0)
      rouge (>= 2.0, < 4.0)
    jekyll-default-layout (0.1.4)
      jekyll (~> 3.0)
    jekyll-feed (0.15.1)
      jekyll (>= 3.7, < 5.0)
    jekyll-gist (1.5.0)
      octokit (~> 4.2)
    jekyll-github-metadata (2.13.0)
      jekyll (>= 3.4, < 5.0)
      octokit (~> 4.0, != 4.4.0)
    jekyll-include-cache (0.2.1)
      jekyll (>= 3.7, < 5.0)
    jekyll-mentions (1.6.0)
      html-pipeline (~> 2.3)
      jekyll (>= 3.7, < 5.0)
    jekyll-optional-front-matter (0.3.2)
      jekyll (>= 3.0, < 5.0)
    jekyll-paginate (1.1.0)
    jekyll-readme-index (0.3.0)
      jekyll (>= 3.0, < 5.0)
    jekyll-redirect-from (0.16.0)
      jekyll (>= 3.3, < 5.0)
    jekyll-relative-links (0.6.1)
      jekyll (>= 3.3, < 5.0)
    jekyll-remote-theme (0.4.3)
      addressable (~> 2.0)
      jekyll (>= 3.5, < 5.0)
      jekyll-sass-converter (>= 1.0, <= 3.0.0, != 2.0.0)
      rubyzip (>= 1.3.0, < 3.0)
    jekyll-sass-converter (1.5.2)
      sass (~> 3.4)
    jekyll-seo-tag (2.8.0)
      jekyll (>= 3.8, < 5.0)
    jekyll-sitemap (1.4.0)
      jekyll (>= 3.7, < 5.0)
    jekyll-swiss (1.0.0)
    jekyll-theme-architect (0.2.0)
      jekyll (> 3.5, < 5.0)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-cayman (0.2.0)
      jekyll (> 3.5, < 5.0)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-dinky (0.2.0)
      jekyll (> 3.5, < 5.0)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-hacker (0.2.0)
      jekyll (> 3.5, < 5.0)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-leap-day (0.2.0)
      jekyll (> 3.5, < 5.0)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-merlot (0.2.0)
      jekyll (> 3.5, < 5.0)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-midnight (0.2.0)
      jekyll (> 3.5, < 5.0)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-minimal (0.2.0)
      jekyll (> 3.5, < 5.0)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-modernist (0.2.0)
      jekyll (> 3.5, < 5.0)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-primer (0.6.0)
      jekyll (> 3.5, < 5.0)
      jekyll-github-metadata (~> 2.9)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-slate (0.2.0)
      jekyll (> 3.5, < 5.0)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-tactile (0.2.0)
      jekyll (> 3.5, < 5.0)
      jekyll-seo-tag (~> 2.0)
    jekyll-theme-time-machine (0.2.0)
      jekyll (> 3.5, < 5.0)
      jekyll-seo-tag (~> 2.0)
    jekyll-titles-from-headings (0.5.3)
      jekyll (>= 3.3, < 5.0)
    jekyll-watch (2.2.1)
      listen (~> 3.0)
    jemoji (0.12.0)
      gemoji (~> 3.0)
      html-pipeline (~> 2.2)
      jekyll (>= 3.0, < 5.0)
    kramdown (2.3.2)
      rexml
    kramdown-parser-gfm (1.1.0)
      kramdown (~> 2.0)
    liquid (4.0.3)
    listen (3.7.1)
      rb-fsevent (~> 0.10, >= 0.10.3)
      rb-inotify (~> 0.9, >= 0.9.10)
    mercenary (0.3.6)
    minima (2.5.1)
      jekyll (>= 3.5, < 5.0)
      jekyll-feed (~> 0.9)
      jekyll-seo-tag (~> 2.1)
    minitest (5.15.0)
    multipart-post (2.1.1)
    nokogiri (1.13.6-arm64-darwin)
      racc (~> 1.4)
    octokit (4.22.0)
      faraday (>= 0.9)
      sawyer (~> 0.8.0, >= 0.5.3)
    pathutil (0.16.2)
      forwardable-extended (~> 2.6)
    public_suffix (4.0.7)
    racc (1.6.0)
    rb-fsevent (0.11.1)
    rb-inotify (0.10.1)
      ffi (~> 1.0)
    rexml (3.2.5)
    rouge (3.26.0)
    ruby2_keywords (0.0.5)
    rubyzip (2.3.2)
    safe_yaml (1.0.5)
    sass (3.7.4)
      sass-listen (~> 4.0.0)
    sass-listen (4.0.0)
      rb-fsevent (~> 0.9, >= 0.9.4)
      rb-inotify (~> 0.9, >= 0.9.7)
    sawyer (0.8.2)
      addressable (>= 2.3.5)
      faraday (> 0.8, < 2.0)
    simpleidn (0.2.1)
      unf (~> 0.1.4)
    terminal-table (1.8.0)
      unicode-display_width (~> 1.1, >= 1.1.1)
    thread_safe (0.3.6)
    typhoeus (1.4.0)
      ethon (>= 0.9.0)
    tzinfo (1.2.9)
      thread_safe (~> 0.1)
    unf (0.1.4)
      unf_ext
    unf_ext (0.0.8.1)
    unicode-display_width (1.8.0)
    zeitwerk (2.5.4)

PLATFORMS
  arm64-darwin-21

DEPENDENCIES
  github-pages (~> 226)
  jekyll-feed (~> 0.15.1)
  minima (~> 2.5.1)

BUNDLED WITH
   2.3.7

298

docs/INSTALL.md

@ -1,283 +1,15 @@

# Installation

Please note that new installation instructions can be found
on the [new documentation site](https://matrix-org.github.io/dendrite/),
or alternatively, in the [installation](installation/) folder:

1. [Planning your deployment](installation/1_planning.md)
2. [Setting up the domain](installation/2_domainname.md)
3. [Preparing database storage](installation/3_database.md)
4. [Generating signing keys](installation/4_signingkey.md)
5. [Installing as a monolith](installation/5_install_monolith.md)
6. [Installing as a polylith](installation/6_install_polylith.md)
7. [Populate the configuration](installation/7_configuration.md)
8. [Starting the monolith](installation/8_starting_monolith.md)
9. [Starting the polylith](installation/9_starting_polylith.md)

# Installing Dendrite

Dendrite can be run in one of two configurations:

* **Monolith mode**: All components run in the same process. In this mode,
  it is possible to run an in-process [NATS Server](https://github.com/nats-io/nats-server)
  instead of running a standalone deployment. This will usually be the preferred model for
  low-to-mid volume deployments, providing the best balance between performance and resource usage.

* **Polylith mode**: A cluster of individual components running in their own processes, dealing
  with different aspects of the Matrix protocol (see [WIRING.md](WIRING-Current.md)). Components
  communicate with each other using internal HTTP APIs and [NATS Server](https://github.com/nats-io/nats-server).
  This will almost certainly be the preferred model for very large deployments but scalability
  comes with a cost. API calls are expensive and therefore a polylith deployment may end up using
  disproportionately more resources for a smaller number of users compared to a monolith deployment.

In almost all cases, it is **recommended to run in monolith mode with PostgreSQL databases**.

Regardless of whether you are running in polylith or monolith mode, each Dendrite component that
requires storage has its own database connections. Both Postgres and SQLite are supported and can
be mixed-and-matched across components as needed in the configuration file.

Be advised that Dendrite is still in development and it's not recommended for
use in production environments just yet!

## Requirements

Dendrite requires:

* Go 1.16 or higher
* PostgreSQL 12 or higher (if using PostgreSQL databases, not needed for SQLite)

If you want to run a polylith deployment, you also need:

* A standalone [NATS Server](https://github.com/nats-io/nats-server) deployment with JetStream enabled

If you want to build it on Windows, you need `gcc` in the path:

* [MinGW-w64](https://www.mingw-w64.org/)

## Building Dendrite

Start by cloning the code:

```bash
git clone https://github.com/matrix-org/dendrite
cd dendrite
```

Then build it:

* Linux or UNIX-like systems:
```bash
./build.sh
```

* Windows:
```dos
build.cmd
```

## Install NATS Server

Follow the [NATS Server installation instructions](https://docs.nats.io/running-a-nats-service/introduction/installation) and then [start your NATS deployment](https://docs.nats.io/running-a-nats-service/introduction/running).

JetStream must be enabled, either by passing the `-js` flag to `nats-server`,
or by specifying the `store_dir` option in the `jetstream` configuration.

## Configuration

### PostgreSQL database setup

Assuming that PostgreSQL 12 (or later) is installed:

* Create role, choosing a new password when prompted:

```bash
sudo -u postgres createuser -P dendrite
```

At this point you have a choice on whether to run all of the Dendrite
components from a single database, or for each component to have its
own database. For most deployments, running from a single database will
be sufficient, although you may wish to separate them if you plan to
split out the databases across multiple machines in the future.

On macOS, omit `sudo -u postgres` from the below commands.

* If you want to run all Dendrite components from a single database:

```bash
sudo -u postgres createdb -O dendrite dendrite
```

... in which case your connection string will look like `postgres://user:pass@database/dendrite`.

* If you want to run each Dendrite component with its own database:

```bash
for i in mediaapi syncapi roomserver federationapi appservice keyserver userapi_accounts; do
    sudo -u postgres createdb -O dendrite dendrite_$i
done
```

... in which case your connection string will look like `postgres://user:pass@database/dendrite_componentname`.
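
If you created one database per component with the loop above, a small helper can produce the matching connection strings. The sketch below is a minimal one and assumes the `dendrite_<component>` naming from that loop. The user, password and host are placeholders. It uses Go's `net/url` so that special characters in the password are escaped correctly.

```go
package main

import (
	"fmt"
	"net/url"
)

// componentDSN builds a connection string of the form
// postgres://user:pass@host/dendrite_<component>, matching the database names
// created by the createdb loop. url.UserPassword percent-escapes characters
// such as '@' or ':' in the password so the result is still a valid URL.
func componentDSN(user, pass, host, component string) string {
	u := url.URL{
		Scheme: "postgres",
		User:   url.UserPassword(user, pass),
		Host:   host,
		Path:   "/dendrite_" + component,
	}
	return u.String()
}

func main() {
	// Same component list as the createdb loop; credentials and host are placeholders.
	components := []string{"mediaapi", "syncapi", "roomserver", "federationapi", "appservice", "keyserver", "userapi_accounts"}
	for _, c := range components {
		fmt.Printf("%-18s %s\n", c+":", componentDSN("dendrite", "password", "localhost", c))
	}
}
```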

### SQLite database setup

**WARNING:** SQLite is suitable for small experimental deployments only and should not be used in production - use PostgreSQL instead for any user-facing federating installation!

Dendrite can use the built-in SQLite database engine for small setups.
The SQLite databases do not need to be pre-built - Dendrite will
create them automatically at startup.

### Server key generation

Each Dendrite installation requires:

* A unique Matrix signing private key
* A valid and trusted TLS certificate and private key

To generate a Matrix signing private key:

```bash
./bin/generate-keys --private-key matrix_key.pem
```

**WARNING:** Make sure to take a safe backup of this key! You will likely need it if you want to reinstall Dendrite, or
any other Matrix homeserver, on the same domain name in the future. If you lose this key, you may have trouble joining
federated rooms.

For testing, you can generate a self-signed certificate and key, although this will not work for public federation:

```bash
./bin/generate-keys --tls-cert server.crt --tls-key server.key
```

If you have server keys from an older Synapse instance,
[convert them](serverkeyformat.md#converting-synapse-keys) to Dendrite's PEM
format and configure them as `old_private_keys` in your config.

### Configuration file

Create config file, based on `dendrite-config.yaml`. Call it `dendrite.yaml`. Things that will need editing include *at least*:

* The `server_name` entry to reflect the hostname of your Dendrite server
* The `database` lines with an updated connection string based on your
  desired setup, e.g. replacing `database` with the name of the database:
  * For Postgres: `postgres://dendrite:password@localhost/database`, e.g.
    * `postgres://dendrite:password@localhost/dendrite_userapi_account` to connect to PostgreSQL with SSL/TLS
    * `postgres://dendrite:password@localhost/dendrite_userapi_account?sslmode=disable` to connect to PostgreSQL without SSL/TLS
  * For SQLite on disk: `file:component.db` or `file:///path/to/component.db`, e.g. `file:userapi_account.db`
  * Postgres and SQLite can be mixed and matched on different components as desired.
* Either one of the following in the `jetstream` configuration section:
  * The `addresses` option — a list of one or more addresses of an external standalone
    NATS Server deployment
  * The `storage_path` — where on the filesystem the built-in NATS server should
    store durable queues, if using the built-in NATS server
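
Because PostgreSQL and SQLite can be mixed across components, it can help to check which backend each `database` line actually points at. The sketch below uses a hypothetical helper `describeDSN` (not part of Dendrite). It recognises only the two forms listed above, `postgres://` URLs and `file:` URIs, and it reports the `sslmode` parameter when one is present.

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

// describeDSN is a hypothetical helper, not part of Dendrite, that reports
// which backend a `database` connection string refers to, using the two
// forms from the configuration list: postgres:// URLs and SQLite file: URIs.
func describeDSN(dsn string) (string, error) {
	switch {
	case strings.HasPrefix(dsn, "postgres://"):
		u, err := url.Parse(dsn)
		if err != nil {
			return "", err
		}
		mode := u.Query().Get("sslmode")
		if mode == "" {
			mode = "driver default" // no sslmode parameter given
		}
		name := strings.TrimPrefix(u.Path, "/")
		return fmt.Sprintf("PostgreSQL database %q on %s (sslmode: %s)", name, u.Host, mode), nil
	case strings.HasPrefix(dsn, "file:"):
		// Accept both file:component.db and file:///path/to/component.db.
		path := strings.TrimPrefix(strings.TrimPrefix(dsn, "file:"), "//")
		return "SQLite file " + path, nil
	default:
		return "", fmt.Errorf("unrecognised connection string %q", dsn)
	}
}

func main() {
	for _, dsn := range []string{
		"postgres://dendrite:password@localhost/dendrite_userapi_account",
		"postgres://dendrite:password@localhost/dendrite_userapi_account?sslmode=disable",
		"file:userapi_account.db",
		"file:///path/to/component.db",
	} {
		desc, err := describeDSN(dsn)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Println(desc)
	}
}
```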

There are other options which may be useful so review them all. In particular,
if you are trying to federate from your Dendrite instance into public rooms
then configuring `key_perspectives` (like `matrix.org` in the sample) can
help to improve reliability considerably by allowing your homeserver to fetch
public keys for dead homeservers from somewhere else.

**WARNING:** Dendrite supports running all components from the same database in
PostgreSQL mode, but this is **NOT** a supported configuration with SQLite. When
using SQLite, all components **MUST** use their own database file.

## Starting a monolith server

The monolith server can be started as shown below. By default it listens for
HTTP connections on port 8008, so you can configure your Matrix client to use
`http://servername:8008` as the server:

```bash
./bin/dendrite-monolith-server
```

If you set `--tls-cert` and `--tls-key` as shown below, it will also listen
for HTTPS connections on port 8448:

```bash
./bin/dendrite-monolith-server --tls-cert=server.crt --tls-key=server.key
```

If the `jetstream` section of the configuration contains no `addresses` but does
contain a `store_dir`, Dendrite will start up a built-in NATS JetStream node
automatically, eliminating the need to run a separate NATS server.
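
The rule in the paragraph above can be written out as a small decision function. This is a sketch with a hypothetical `jetStreamConfig` struct, not Dendrite's configuration type. The text calls the storage option `store_dir` in some places and `storage_path` in the configuration list, so check the sample config for the real key name. Treating "neither option set" as an error is an assumption of this sketch.

```go
package main

import "fmt"

// jetStreamConfig is a hypothetical stand-in for the `jetstream` section:
// Addresses lists external NATS servers; StoreDir is where an embedded node
// would keep its durable data.
type jetStreamConfig struct {
	Addresses []string
	StoreDir  string
}

// natsMode applies the rule described in the text: external servers are used
// when addresses are set; otherwise a storage directory means a built-in node
// is started. Rejecting an empty config is an assumption of this sketch.
func natsMode(cfg jetStreamConfig) (string, error) {
	switch {
	case len(cfg.Addresses) > 0:
		return fmt.Sprintf("external NATS at %v", cfg.Addresses), nil
	case cfg.StoreDir != "":
		return "built-in NATS JetStream node storing data in " + cfg.StoreDir, nil
	default:
		return "", fmt.Errorf("jetstream: configure either addresses or a storage directory")
	}
}

func main() {
	for _, cfg := range []jetStreamConfig{
		{StoreDir: "./jetstream"},
		{Addresses: []string{"nats://localhost:4222"}},
		{},
	} {
		mode, err := natsMode(cfg)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Println(mode)
	}
}
```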

## Starting a polylith deployment

The following contains scripts which will run all the required processes in order to point a Matrix client at Dendrite.

### nginx (or other reverse proxy)

This is what your clients and federated hosts will talk to. It must forward
requests onto the correct API server based on URL:

* `/_matrix/client` to the client API server
* `/_matrix/federation` to the federation API server
* `/_matrix/key` to the federation API server
* `/_matrix/media` to the media API server

See `docs/nginx/polylith-sample.conf` for a sample configuration.
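
nginx is the documented choice here. To make the routing rule concrete, the sketch below implements the same four prefix rules in Go with `net/http/httputil`. The upstream ports are hypothetical placeholders; use the listen addresses from your own configuration. The Sync server section notes that clients also reach the sync server through the proxy, so a real setup needs additional, more specific `/_matrix/client` rules that this sketch omits.

```go
package main

import (
	"fmt"
	"log"
	"net/http"
	"net/http/httputil"
	"net/url"
	"strings"
)

// Hypothetical upstream addresses for the polylith components.
var routes = []struct {
	prefix   string
	upstream string
}{
	{"/_matrix/client", "http://localhost:7771"},
	{"/_matrix/federation", "http://localhost:7772"},
	{"/_matrix/key", "http://localhost:7772"},
	{"/_matrix/media", "http://localhost:7774"},
}

// upstreamFor returns the upstream for path, matching whole path segments
// only, or "" if no rule applies.
func upstreamFor(path string) string {
	for _, r := range routes {
		if path == r.prefix || strings.HasPrefix(path, r.prefix+"/") {
			return r.upstream
		}
	}
	return ""
}

// newRouter builds one reverse proxy per upstream and dispatches on path.
func newRouter() (http.Handler, error) {
	proxies := make(map[string]*httputil.ReverseProxy)
	for _, r := range routes {
		target, err := url.Parse(r.upstream)
		if err != nil {
			return nil, err
		}
		proxies[r.upstream] = httputil.NewSingleHostReverseProxy(target)
	}
	return http.HandlerFunc(func(w http.ResponseWriter, req *http.Request) {
		up := upstreamFor(req.URL.Path)
		if up == "" {
			http.NotFound(w, req)
			return
		}
		proxies[up].ServeHTTP(w, req)
	}), nil
}

func main() {
	router, err := newRouter()
	if err != nil {
		log.Fatal(err)
	}
	_ = router // to serve: log.Fatal(http.ListenAndServe(":8008", router))
	for _, p := range []string{"/_matrix/client/v3/login", "/_matrix/federation/v1/version", "/_matrix/media/v3/upload"} {
		fmt.Printf("%-32s -> %s\n", p, upstreamFor(p))
	}
}
```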

### Client API server

This is what implements CS API endpoints. Clients talk to this via the proxy in
order to send messages, create and join rooms, etc.

```bash
./bin/dendrite-polylith-multi --config=dendrite.yaml clientapi
```

### Sync server

This is what implements `/sync` requests. Clients talk to this via the proxy
in order to receive messages.

```bash
./bin/dendrite-polylith-multi --config=dendrite.yaml syncapi
```

### Media server